📌 TOPINDIATOURS Breaking ai: Moving past speculation: How deterministic CPUs deliv

For more than three decades, modern CPUs have relied on speculative execution to keep pipelines full. When it emerged in the 1990s, speculation was hailed as a breakthrough — just as pipelining and superscalar execution had been in earlier decades. Each marked a generational leap in microarchitecture. By predicting the outcomes of branches and memory loads, processors could avoid stalls and keep execution units busy.

But this architectural shift came at a cost: Wasted energy when predictions failed, increased complexity and vulnerabilities such as Spectre and Meltdown. As David Patterson observed in 1980, “A RISC potentially gains in speed merely from a simpler design.” Patterson’s principle of simplicity underpins a new alternative to speculation: A deterministic, time-based execution model.

For the first time since speculative execution became the dominant paradigm, a fundamentally new approach has been invented. This breakthrough is embodied in a series of six U.S. patents recently issued by the U.S. Patent and Trademark Office (USPTO). Together, they introduce a radically different instruction execution model. Departing sharply from conventional speculative techniques, this deterministic framework replaces guesswork with a time-based, latency-tolerant mechanism. Each instruction is assigned a precise execution slot within the pipeline, resulting in a rigorously ordered and predictable flow of execution. This model redefines how modern processors can handle latency and concurrency with greater efficiency and reliability.

A simple time counter deterministically sets the exact future cycle at which each instruction executes. Each instruction is dispatched to an execution queue with a preset execution time, chosen from when its data dependencies resolve and when the required resources are available: read buses, execution units and the write bus to the register file. Each instruction remains queued until its scheduled execution slot arrives. This new deterministic approach may represent the first major architectural challenge to speculation since it became the standard.
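The dispatch rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the patented design: the `Instr` format, the latency values, the one-instruction-per-cycle dispatch and the names `schedule`, `reg_ready` and `occupied` are all hypothetical, and resource conflicts are reduced to a single execution unit that accepts one instruction per cycle.

```python
from dataclasses import dataclass


@dataclass
class Instr:
    name: str
    dest: str
    srcs: tuple
    latency: int  # hypothetical result latency in cycles


def schedule(program):
    """Give every instruction a fixed execution cycle at dispatch time."""
    reg_ready = {}    # register -> cycle at which its value becomes available
    occupied = set()  # cycles already booked on the single execution unit
    slots = []
    # Assumption: one instruction is dispatched per tick of the time counter.
    for dispatch_time, ins in enumerate(program):
        # Earliest cycle at which every source operand is available.
        operands_ready = max((reg_ready.get(r, 0) for r in ins.srcs), default=0)
        # The slot must come after dispatch and after the operands are ready.
        t = max(dispatch_time + 1, operands_ready)
        # Skip cycles in which the execution unit is already booked.
        while t in occupied:
            t += 1
        occupied.add(t)
        reg_ready[ins.dest] = t + ins.latency
        slots.append((ins.name, t))
    return slots


program = [
    Instr("load r1", "r1", (), 4),
    Instr("load r2", "r2", (), 4),
    Instr("mul r3", "r3", ("r1", "r2"), 3),
    Instr("add r4", "r4", ("r3", "r1"), 1),
    Instr("li r5", "r5", (), 1),  # independent of everything above
]
slots = schedule(program)
```

In this sketch the independent `li r5` is given a slot earlier than the `mul` and `add` that precede it in program order, because their operands are not ready yet. The order is decided once, at dispatch, so nothing is ever rolled back.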

The architecture extends naturally into matrix computation, with a RISC-V instruction set proposal under community review. Configurable general matrix multiply (GEMM) units, ranging from 8×8 to 64×64, can operate using either register-based or direct-memory access (DMA)-fed operands. This flexibility supports a wide range of AI and high-performance computing (HPC) workloads. Early analysis suggests scalability that rivals Google’s TPU cores, while maintaining significantly lower cost and power requirements.
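To make the role of a fixed-size GEMM unit concrete, the sketch below breaks a larger matrix multiply into tile-sized block products. It is an illustration only: `gemm_tile` merely stands in for one operation of a hardware unit, the function names are hypothetical, and the code assumes square matrices whose size is a multiple of the tile size.

```python
def gemm_tile(a, b, c, i0, j0, k0, tile):
    """Accumulate one tile-by-tile block product into c (stand-in for one GEMM-unit operation)."""
    for i in range(i0, i0 + tile):
        for j in range(j0, j0 + tile):
            acc = c[i][j]
            for k in range(k0, k0 + tile):
                acc += a[i][k] * b[k][j]
            c[i][j] = acc


def tiled_matmul(a, b, tile=8):
    """Multiply two n-by-n matrices by issuing (n / tile) ** 3 tile operations."""
    n = len(a)
    if n % tile != 0:
        raise ValueError("matrix size must be a multiple of the tile size")
    c = [[0] * n for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, n, tile):
                gemm_tile(a, b, c, i0, j0, k0, tile)
    return c
```

Because the number and shape of tile operations depend only on the matrix dimensions, the sequence of work is known before execution starts, which is the property a statically scheduled design relies on.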

Rather than a direct comparison with general-purpose CPUs, the more accurate reference point is vector and matrix engines: Traditional CPUs still depend on speculation and branch prediction, whereas this design applies deterministic scheduling directly to GEMM and vector units. Its efficiency stems not only from the configurable GEMM blocks but also from the time-based execution model, where instructions are decoded and assigned precise execution slots based on operand readiness and resource availability.

Execution is never a random or heuristic choice among many candidates, but a predictable, pre-planned flow that keeps compute resources continuously busy. Planned matrix benchmarks will provide direct comparisons with TPU GEMM implementations, highlighting the ability to deliver datacenter-class performance without datacenter-class overhead.

Critics may argue that static scheduling introduces latency into instruction execution. In reality, the latency already exists — waiting on data dependencies or memory fetches. Conventional CPUs attempt to hide it with speculation, but when predictions fail, the resulting pipeline flush introduces delay and wastes power.

The time-counter approach acknowledges this latency and fills it deterministically with useful work, avoiding rollbacks. As the first patent notes, instructions retain out-of-order efficiency: “A microprocessor with a time counter for statically dispatching instructions enables execution based on predicted timing rather than speculative issue and recovery,” with preset execution times but without the overhead of register renaming or speculative comparators.

Why speculation stalled

Speculative execution boosts performance by predicting outcomes before they’re known — executing instructions ahead of time and discarding them if the guess was wrong. While this approach can accelerate workloads, it also introduces unpredictability and power inefficiency. Mispredictions inject “No Ops” into the pipeline, stalling progress and wasting energy on work that never completes.
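The cost of mispredictions can be estimated with the standard first-order model, in which each mispredicted branch adds a fixed flush penalty to the average cycles per instruction (CPI). The sketch below applies that model. The input numbers are hypothetical placeholders chosen for the arithmetic, not measurements of any processor.

```python
def effective_cpi(base_cpi, branch_fraction, mispredict_rate, flush_penalty):
    """First-order model: base CPI plus the average flush cycles per instruction."""
    return base_cpi + branch_fraction * mispredict_rate * flush_penalty


# Hypothetical inputs: 20% of instructions are branches, 5% of those are
# mispredicted, and each misprediction costs 15 cycles of discarded work.
cpi = effective_cpi(base_cpi=1.0, branch_fraction=0.20,
                    mispredict_rate=0.05, flush_penalty=15)
```

With these placeholder values the model gives a CPI of about 1.15, meaning roughly 15% more cycles than the ideal pipeline, all spent on work that is thrown away.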

These issues are magnified in modern AI and machine learning (ML) workloads, where vector and matrix operations dominate and memory access patterns are irregular. Long fetches, non-cacheable loads and misaligned vectors frequently trigger pipeline flushes in speculative architectures.

The result is performance cliffs that vary wildly across datasets and problem sizes, making consistent tuning nearly impossible. Worse still, speculative side effects have exposed vulnerabilities that led to high-profile security exploits. As data intensity grows and memory systems strain, speculation struggles to keep pace — undermining its original promise of seamless acceleration.

Time-based execution and deterministic scheduling

At the core of this invention is a vector coprocessor with a time counter for statically dispatching instructions. Rather than relying on speculation, instructions are issued only when data dependencies and latency windows are fully known. This eliminates guesswork and costly pipeline flushes while preserving the throughput advantages of out-of-order execution. Architectures built on this patented framework feature deep pipelines, typically spanning 12 stages, combined with wide front ends supporting up to 8-way decode and large reorder buffers exceeding 250 entries.

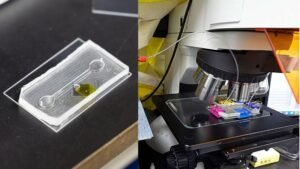

As illustrated in Figure 1, the architecture mirrors a conventional RISC-V processor at the top level, with instruction fetch and decode stages feeding into execution units. The innovation emerges in the integration of a time counter and register scoreboard, strategically positioned between fetch/decode and the vector execution units. Instead of relying on speculative comparators or register renaming, the design uses a register scoreboard and Time Resource Matrix (TRM) to deterministically schedule instructions based on operand readiness and resource availability.

Figure 1: High-level block diagram of deterministic processor. A time counter and scoreboard sit between fetch/decode and vector execution units, ensuring instructions issue only when operands are ready.
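One way to picture a Time Resource Matrix is as a table indexed by future cycle and resource, where each entry counts how many units of that resource are already booked. The sketch below is a hypothetical model, not the patented structure: the capacities, the resource names and the assumption that an instruction reads operands and occupies the execution unit in its issue cycle and uses the write bus `latency` cycles later are all invented for illustration.

```python
from collections import defaultdict

# Hypothetical per-cycle capacities for each shared resource.
CAPACITY = {"read_bus": 2, "alu": 1, "write_bus": 1}


class TimeResourceMatrix:
    def __init__(self, capacity):
        self.capacity = capacity
        # cycle -> resource -> number of units already booked
        self.used = defaultdict(lambda: defaultdict(int))

    def _free(self, cycle, resource, amount=1):
        return self.used[cycle][resource] + amount <= self.capacity[resource]

    def reserve(self, earliest, n_reads, latency):
        """Book the first cycle >= earliest at which all needed resources are free."""
        if n_reads > self.capacity["read_bus"]:
            raise ValueError("instruction needs more read buses than exist")
        t = earliest
        while not (self._free(t, "read_bus", n_reads)
                   and self._free(t, "alu")
                   and self._free(t + latency, "write_bus")):
            t += 1
        self.used[t]["read_bus"] += n_reads
        self.used[t]["alu"] += 1
        self.used[t + latency]["write_bus"] += 1
        return t


trm = TimeResourceMatrix(CAPACITY)
t1 = trm.reserve(earliest=3, n_reads=2, latency=2)  # operands ready at cycle 3
t2 = trm.reserve(earliest=3, n_reads=1, latency=2)  # collides with t1 at cycle 3
t3 = trm.reserve(earliest=4, n_reads=0, latency=1)  # collides on ALU, then on write bus
```

In this model the `earliest` argument would come from the register scoreboard (the cycle at which operands are ready), and the matrix pushes the slot later only when a bus or execution unit is already booked. Here the three reservations land on cycles 3, 4 and 6.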

A typical program running on the deterministic processor begins much like it does on any conventional RISC-V system: Instructions are fetched from memory and decoded to determine whether they are scalar, vector, matrix or custom extensions. The difference emerges at the point of dispatch. Instead of issuing …

Content automatically shortened.

🔗 Source: venturebeat.com

📌 TOPINDIATOURS Update ai: Studio Ghibli Demands That OpenAI Stop Ripping Off Its Work

After Sora 2 was used to relentlessly churn out depictions of Japanese anime and video game characters, the creators of those characters are striking back.

On October 28, a group representing Studio Ghibli, Bandai Namco, Square Enix, and other major Japanese publishers submitted a written request to OpenAI demanding that it stop using their copyrighted content to train the video generating AI tool.

The move, as first reported by Automaton, is the latest example of Japan signaling protectiveness of its art and media against an AI industry that catapulted itself to extraordinary heights by devouring copyrighted works en masse without permission or compensation.

In a statement, the group, called the Content Overseas Distribution Association (CODA), said that it had determined Sora 2 is able to generate outputs that “closely resembles Japanese content or images” because this content was used as training data.

Therefore, in cases “where specific copyrighted works are reproduced or similarly generated as outputs,” CODA said, it “considers that the act of replication during the machine learning process may constitute copyright infringement.”

Sora 2 proved to be yet another generative AI tool with an irreverent attitude toward copyright law, only this one was designed to instantly feed into an endless TikTok-style scroll of short-form vertical videos. Here, there was no pretending that OpenAI’s goal was to do anything other than serve up slop.

Recognizable characters like SpongeBob were often parodied with the AI, and perhaps none more than Japanese characters across various franchises. Many Sora videos featured Pokemon, including one showing a deepfaked OpenAI CEO Sam Altman grilling a dead Pikachu, and another showing Altman gazing at a flock of Pokemon frolicking across a field, before grimacing into the camera and saying, “I hope Nintendo doesn’t sue us.”

The real Sam Altman, in fact, has acknowledged his fans’ affinity for ripping off Japanese art, though without mentioning the outrage this caused.

“In particular, we’d like to acknowledge the remarkable creative output of Japan,” he wrote in a blog post following the launch of Sora 2. “We are struck by how deep the connection between users and Japanese content is!”

This “deep” connection goes back a while. When OpenAI released new image generation capabilities for ChatGPT in March, it spawned a mega-viral trend of using the tool to generate images imitating the style of the legendary Japanese animation house Studio Ghibli, including “Ghiblified” selfies. Altman consecrated the trend by creating his own Ghibli-style portrait, which is still his profile picture on social media to this day.

It’s no surprise, then, that Japan has been feeling a little on edge about OpenAI’s attitude towards copyright. In mid-October, the Japanese government formally asked OpenAI to stop ripping off the nation’s beloved characters. Minoru Kiuchi, the minister of state for IP and AI strategy, called manga and anime “irreplaceable treasures.”

Initially, OpenAI signaled that copyright holders would have to manually opt out of having their works cribbed by Sora, but then reversed course after the launch and said that they would be opted out by default. Crucially, this was only after it piggybacked on the virality of copyrighted characters to shoot the app to the top of Apple’s App Store.

CODA also doesn’t seem to consider this a satisfactory measure. In the statement, the group notes that “under Japan’s copyright system, prior permission is generally required for the use of copyrighted works, and there is no system allowing one to avoid liability for infringement through subsequent objections.”

The CODA members have made two requests: that their content not be used for AI training without permission, and that OpenAI “responds sincerely to claims and inquiries from CODA member companies regarding copyright infringement related to Sora 2’s outputs.”

The post Studio Ghibli Demands That OpenAI Stop Ripping Off Its Work appeared first on Futurism.

🔗 Source: futurism.com
