📌 TOPINDIATOURS Breaking ai: Oldest piece made of hippopotamus ivory reveals prehi

Researchers at the University of Barcelona have identified the oldest-known piece made of hippopotamus ivory in the Iberian Peninsula.

Found at the Bòbila Madurell site near Barcelona, one of Europe’s most significant prehistoric settlements, the object carved out of hippopotamus ivory dates to the second quarter of the third millennium BC.

The study, published in the Journal of Archaeological Science: Reports, re-examined the museum artifact using advanced techniques to glean clues about its function. What they discovered, however, turned out to be much more revealing than they had anticipated.

The hippopotamus ivory did not originate locally, so it would have traveled an impressive distance to get to Spain, likely originating from the Nile Valley or Africa, as per Phys.org. Alongside other exotic materials like Baltic amber and imported flint, this object reveals the extensive reach of prehistoric trade networks across the western Mediterranean.

Special hippo ivory

As the Bòbila Madurell artifact was housed in the Museu d’Història de Sabadell, researchers at the Prehistoric Studies and Research Seminar (SERP) of the University of Barcelona had decided to reexamine the rare and precious artifact carved, remarkably, from hippopotamus ivory.

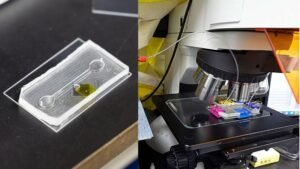

Using a combination of Fourier transform infrared spectrometry (FTIR), microscopic analysis, and traceology, researchers identified that the object was carved from the first lower incisor of a hippopotamus. It measures just over 4 inches long and 1/2 inch wide.

However, the object isn’t a new discovery. It was first documented in 1977. Archaeologists back then found it in a semi-subterranean dwelling in Bòbila Madurell near Barcelona.

Spanning approximately 28 hectares, the Neolithic and Copper Age settlement is one of Europe’s most iconic sites. The Véraza occupied this territory, a culture with an extensive reach from southern France to northeastern Iberia between 3600 and 2100 BCE.

As the ivory object even resembles a tool, researchers discovered it along with ceramic spindle whorls, leading them to consider the possibility that it might have been a weaving tool or beater rather than a decorative or ritual object, According to Ancient Origins, However, they haven’t ruled out other possibilities.

A tool, figurine, or?

After carefully studying its wear patterns and red pigment residues as per Ancient Origins, researchers concluded that whoever did shape the object put considerable time and effort into it, even going so far as to polish it, suggesting that it held value. The traces of red pigment found on the object were composed of iron oxyhydroxides mixed with animal fat or blood.

Though researchers hypothesize ritualistic use, the location where it was discovered might point to its use as a tool in textile production. The red stains might have been an organic binder, according to Phys.org.

The material of hippopotamus ivory continues to confound researchers as a precious and rare resource for the region, opening up another, if not more revealing, window onto the prehistoric past.

“The finding opens the door to consider possible long-distance exchange networks and to consider the role of this and other exotic materials in the growing social complexity of the Iberian Peninsula during the Chalcolithic, also known as the Copper Age,” as per Phys.org.

An ancient, global world

The presence of hippopotamus ivory in Iberia suggests that trade networks extended far beyond the western Mediterranean. African and Asian elephant ivory reached southern Iberia through North African routes, but hippopotamus ivory was rarer and may have traveled along the northern Mediterranean maritime network, as per the study.

According to Phys.org, the discovery challenges previous assumptions about the reach of Copper Age trade and offers a new lens through which to study prehistoric Iberian society. The study demonstrates the value of reexamining museum collections with the most advanced technology available today, as this artifact even reveals an ancient globalized world.

Ancient Origins, to conclude, points to the need for further research to glean deeper insights into the trade routes and interactions that occurred between prehistoric cultures across the region. So the Bòbila Madurell artifact stands as tangible proof of the connectivity, technological sophistication, and cultural complexity of Chalcolithic communities in northeastern Iberia.

Read the study in Journal of Archaeological Science: Reports.

🔗 Sumber: interestingengineering.com

📌 TOPINDIATOURS Breaking ai: MIT Researchers Unveil “SEAL”: A New Step Towards Sel

The concept of AI self-improvement has been a hot topic in recent research circles, with a flurry of papers emerging and prominent figures like OpenAI CEO Sam Altman weighing in on the future of self-evolving intelligent systems. Now, a new paper from MIT, titled “Self-Adapting Language Models,” introduces SEAL (Self-Adapting LLMs), a novel framework that allows large language models (LLMs) to update their own weights. This development is seen as another significant step towards the realization of truly self-evolving AI.

The research paper, published yesterday, has already ignited considerable discussion, including on Hacker News. SEAL proposes a method where an LLM can generate its own training data through “self-editing” and subsequently update its weights based on new inputs. Crucially, this self-editing process is learned via reinforcement learning, with the reward mechanism tied to the updated model’s downstream performance.

The timing of this paper is particularly notable given the recent surge in interest surrounding AI self-evolution. Earlier this month, several other research efforts garnered attention, including Sakana AI and the University of British Columbia’s “Darwin-Gödel Machine (DGM),” CMU’s “Self-Rewarding Training (SRT),” Shanghai Jiao Tong University’s “MM-UPT” framework for continuous self-improvement in multimodal large models, and the “UI-Genie” self-improvement framework from The Chinese University of Hong Kong in collaboration with vivo.

Adding to the buzz, OpenAI CEO Sam Altman recently shared his vision of a future with self-improving AI and robots in his blog post, “The Gentle Singularity.” He posited that while the initial millions of humanoid robots would need traditional manufacturing, they would then be able to “operate the entire supply chain to build more robots, which can in turn build more chip fabrication facilities, data centers, and so on.” This was quickly followed by a tweet from @VraserX, claiming an OpenAI insider revealed the company was already running recursively self-improving AI internally, a claim that sparked widespread debate about its veracity.

Regardless of the specifics of internal OpenAI developments, the MIT paper on SEAL provides concrete evidence of AI’s progression towards self-evolution.

Understanding SEAL: Self-Adapting Language Models

The core idea behind SEAL is to enable language models to improve themselves when encountering new data by generating their own synthetic data and optimizing their parameters through self-editing. The model’s training objective is to directly generate these self-edits (SEs) using data provided within the model’s context.

The generation of these self-edits is learned through reinforcement learning. The model is rewarded when the generated self-edits, once applied, lead to improved performance on the target task. Therefore, SEAL can be conceptualized as an algorithm with two nested loops: an outer reinforcement learning (RL) loop that optimizes the generation of self-edits, and an inner update loop that uses the generated self-edits to update the model via gradient descent.

This method can be viewed as an instance of meta-learning, where the focus is on how to generate effective self-edits in a meta-learning fashion.

A General Framework

SEAL operates on a single task instance (C,τ), where C is context information relevant to the task, and τ defines the downstream evaluation for assessing the model’s adaptation. For example, in a knowledge integration task, C might be a passage to be integrated into the model’s internal knowledge, and τ a set of questions about that passage.

Given C, the model generates a self-edit SE, which then updates its parameters through supervised fine-tuning: θ′←SFT(θ,SE). Reinforcement learning is used to optimize this self-edit generation: the model performs an action (generates SE), receives a reward r based on LMθ′’s performance on τ, and updates its policy to maximize the expected reward.

The researchers found that traditional online policy methods like GRPO and PPO led to unstable training. They ultimately opted for ReST^EM, a simpler, filtering-based behavioral cloning approach from a DeepMind paper. This method can be viewed as an Expectation-Maximization (EM) process, where the E-step samples candidate outputs from the current model policy, and the M-step reinforces only those samples that yield a positive reward through supervised fine-tuning.

The paper also notes that while the current implementation uses a single model to generate and learn from self-edits, these roles could be separated in a “teacher-student” setup.

Instantiating SEAL in Specific Domains

The MIT team instantiated SEAL in two specific domains: knowledge integration and few-shot learning.

- Knowledge Integration: The goal here is to effectively integrate information from articles into the model’s weights.

- Few-Shot Learning: This involves the model adapting to new tasks with very few examples.

Experimental Results

The experimental results for both few-shot learning and knowledge integration demonstrate the effectiveness of the SEAL framework.

In few-shot learning, using a Llama-3.2-1B-Instruct model, SEAL significantly improved adaptation success rates, achieving 72.5% compared to 20% for models using basic self-edits without RL training, and 0% without adaptation. While still below “Oracle TTT” (an idealized baseline), this indicates substantial progress.

For knowledge integration, using a larger Qwen2.5-7B model to integrate new facts from SQuAD articles, SEAL consistently outperformed baseline methods. Training with synthetically generated data from the base Qwen-2.5-7B model already showed notable improvements, and subsequent reinforcement learning further boosted performance. The accuracy also showed rapid improvement over external RL iterations, often surpassing setups using GPT-4.1 generated data within just two iterations.

Qualitative examples from the paper illustrate how reinforcement learning leads to the generation of more detailed self-edits, resulting in improved performance.

While promising, the researchers also acknowledge some limitations of the SEAL framework, including aspects related to catastrophic forgetting, computational overhead, and context-dependent evaluation. These are discussed in detail in the original paper.

Original Paper: https://arxiv.org/pdf/2506.10943

Project Site: https://jyopari.github.io/posts/seal

Github Repo: https://github.com/Continual-Intelligence/SEAL

The post MIT Researchers Unveil “SEAL”: A New Step Towards Self-Improving AI first appeared on Synced.

🔗 Sumber: syncedreview.com

🤖 Catatan TOPINDIATOURS

Artikel ini adalah rangkuman otomatis dari beberapa sumber terpercaya. Kami pilih topik yang sedang tren agar kamu selalu update tanpa ketinggalan.

✅ Update berikutnya dalam 30 menit — tema random menanti!